Docker is a tool designed to make it easier to create, deploy, and run applications by using containers. Containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and ship it as a single package. As a result, the application runs the same way on any machine that has Docker installed, regardless of the software or configuration already on that machine, as long as the image was built for the machine's CPU architecture (for example x86-64 or ARM64). This makes it easier to build, test, and deploy applications, especially in a distributed environment.

Let’s start by understanding what VMs and containers even are.

What are “containers” and “VMs”?

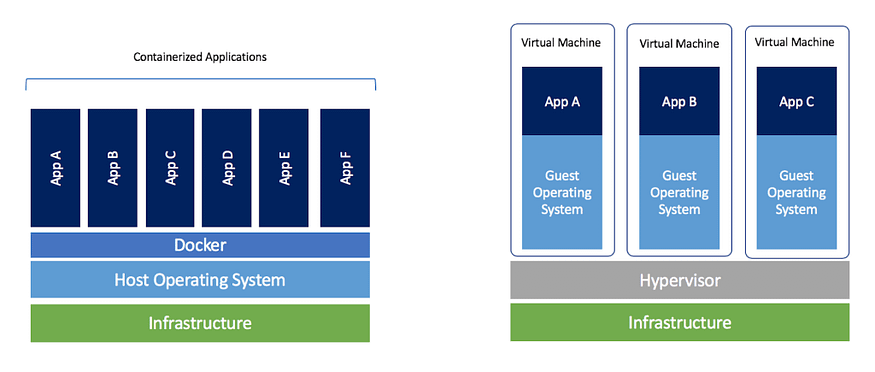

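Containers and VMs are both technologies that are used to run applications in an isolated environment. However, they isolate at different levels: a VM emulates an entire computer and runs its own guest operating system, while containers share the host's operating system kernel and isolate only the application and its dependencies.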

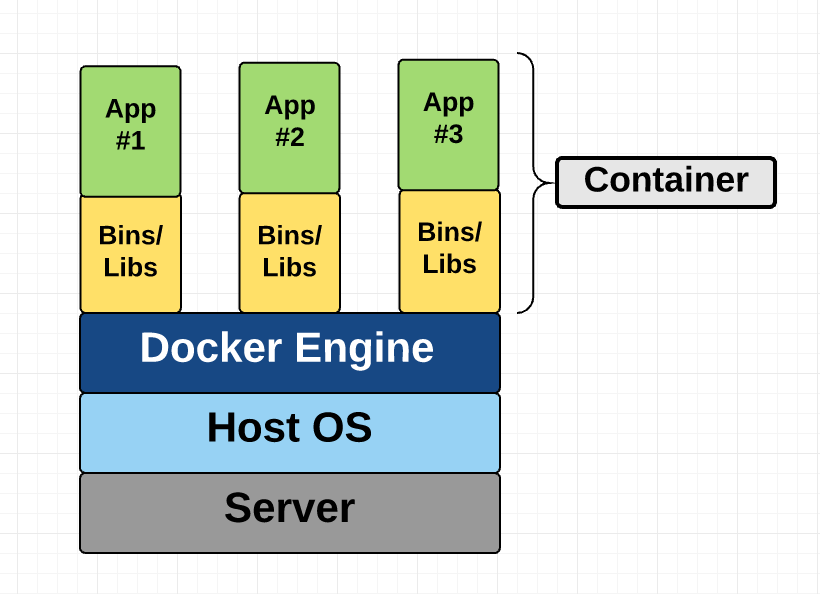

A container is a lightweight, isolated environment for running an application. It is created from an image: a stand-alone, executable package of software that includes everything needed to run the application, including the code, a runtime, libraries, environment variables, and config files. Because containers include everything an application needs to run, they are very portable and can run on any machine that has Docker installed.

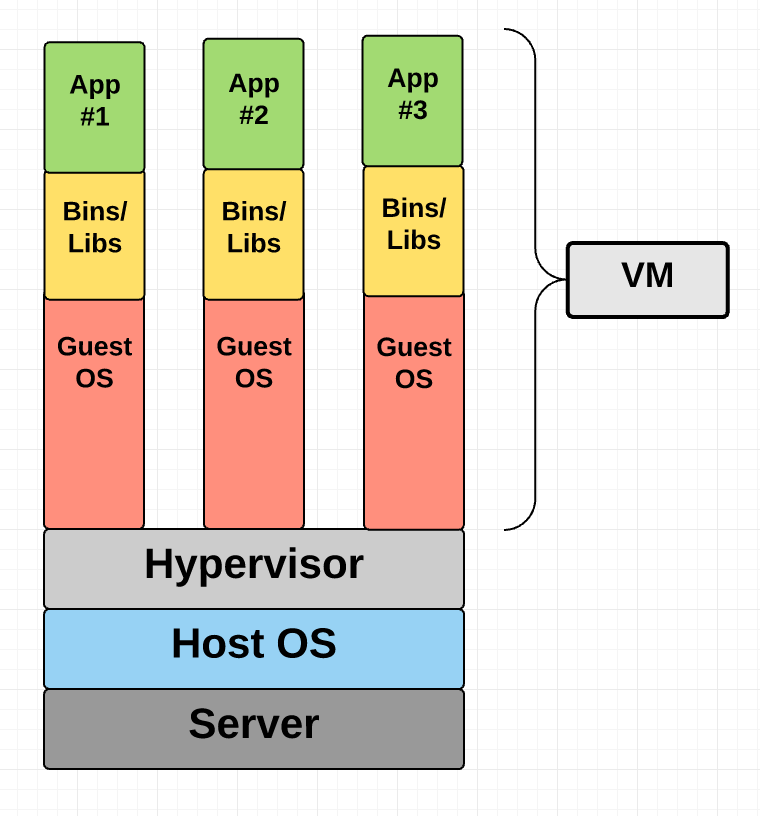

A virtual machine (VM) is a software-based emulation of a physical computer. When you create a VM, you create a virtualized version of a physical computer, complete with a virtual CPU, memory, storage, and network interfaces. VMs are typically used to run multiple operating systems on a single physical machine, allowing you to run applications that are not compatible with the host operating system. VMs are generally considered to be heavier and less portable than containers.

In summary, containers and VMs both run applications in isolated environments, but they work in different ways. Containers are lightweight and portable, while VMs are heavier and are typically used to run multiple operating systems on a single physical machine.
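One practical consequence of this difference is that a container does not boot an operating system kernel of its own; it uses the host's. The short sketch below makes that visible. It assumes Docker is installed and can pull the public `alpine` image from Docker Hub, and it prints the kernel release reported by the host and by a throwaway container.

```shell
# Kernel release reported by the host
host_kernel="$(uname -r)"

# Kernel release reported from inside a throwaway Alpine Linux container
# (--rm deletes the container as soon as the command exits)
container_kernel="$(docker run --rm alpine uname -r)"

echo "host:      $host_kernel"
echo "container: $container_kernel"
```

With Docker Engine on a Linux host, the two lines match: the container is an isolated process running on the host's own kernel, with no guest operating system to start. Docker Desktop, which is how Docker usually runs on macOS and Windows, runs containers inside a small Linux VM, so there the container reports that VM's kernel instead. A VM, by contrast, always boots its own guest kernel.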

Virtual Machines

A virtual machine (VM) is a software-based emulation of a physical computer. When you create a VM, you create a virtualized version of a physical computer, complete with a virtual CPU, memory, storage, and network interfaces. This allows you to run an operating system and applications on the VM as if it were a physical computer, but in reality, it runs on a hypervisor, which in turn runs either directly on the hardware or on top of a host operating system.

VMs are typically used to run multiple operating systems on a single physical machine, allowing you to run applications that are not compatible with the host operating system. For example, you could use a VM to run Windows on a Mac, or to run Linux on a Windows machine. This can be useful for testing or development purposes, or for running applications that have specific system requirements.

Because each VM runs a complete guest operating system of its own, VMs need more memory and disk space and take longer to start, so they are generally considered heavier and less portable than technologies such as containers. However, they offer more flexibility and control over the underlying hardware and operating system, which can be useful in certain situations.

Containers

A container is a running instance of an image: a lightweight, stand-alone, and executable package of software that includes everything needed to run an application, including the code, a runtime, libraries, environment variables, and config files. Containers are designed to be portable, so that an application runs the same way on any machine that has Docker installed, regardless of differences in installed software or configuration between the machines (provided the image was built for the machine's CPU architecture).

Containers are a popular way to package and distribute applications because they let developers bundle all of the dependencies and libraries an application needs, so the application behaves the same way on every machine that runs it. This makes it easier to build, test, and deploy applications, especially in a distributed environment.

In production, containers are often managed by a container orchestration tool, such as Kubernetes, which schedules, restarts, and coordinates the containers that make up an application. This makes it easier to scale and manage your application across many containers and machines.

Where does Docker come in?

Docker is a tool designed to make it easier to create, deploy, and run applications using containers. It provides a platform for building, managing, and running containers.

With Docker, you can package your application and its dependencies into a self-contained unit called a Docker image. This image can then be run on any machine that has Docker installed, and your application will behave the same way regardless of the software or configuration on that machine, as long as the image was built for the machine's CPU architecture.

Docker also provides tools for running applications made of several containers: Docker Compose starts and connects a set of containers on one machine, and Docker Swarm scales and orchestrates containers across multiple machines. This makes it easier to build and deploy distributed applications and to manage and scale them as your needs change.
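As a rough illustration of the multi-container side, here is a sketch that writes a minimal Docker Compose file for the Node.js example built later in this article plus a second container. The service names `web` and `cache` and the use of the public `redis:7-alpine` image are hypothetical choices for illustration only; the sample app does not actually talk to Redis. Run it in the directory that holds the Dockerfile.

```shell
# Write a minimal Compose file next to the Dockerfile.
# "web", "cache" and the Redis image are illustrative assumptions.
cat > compose.yaml <<'EOF'
services:
  web:
    build: .              # build the image from the Dockerfile in this directory
    ports:
      - "8080:8080"       # host port 8080 -> container port 8080
    depends_on:
      - cache             # start the cache container before web
  cache:
    image: redis:7-alpine # pulled from Docker Hub, no Dockerfile needed
EOF
```

With Compose v2 installed, `docker compose up -d` in the same directory builds the `web` image and starts both containers on a shared network, and `docker compose down` stops and removes them. On that network each service is reachable by its name, so the app could connect to Redis at host `cache`, port 6379. Note that `depends_on` only controls start order; it does not wait until Redis is ready to accept connections.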

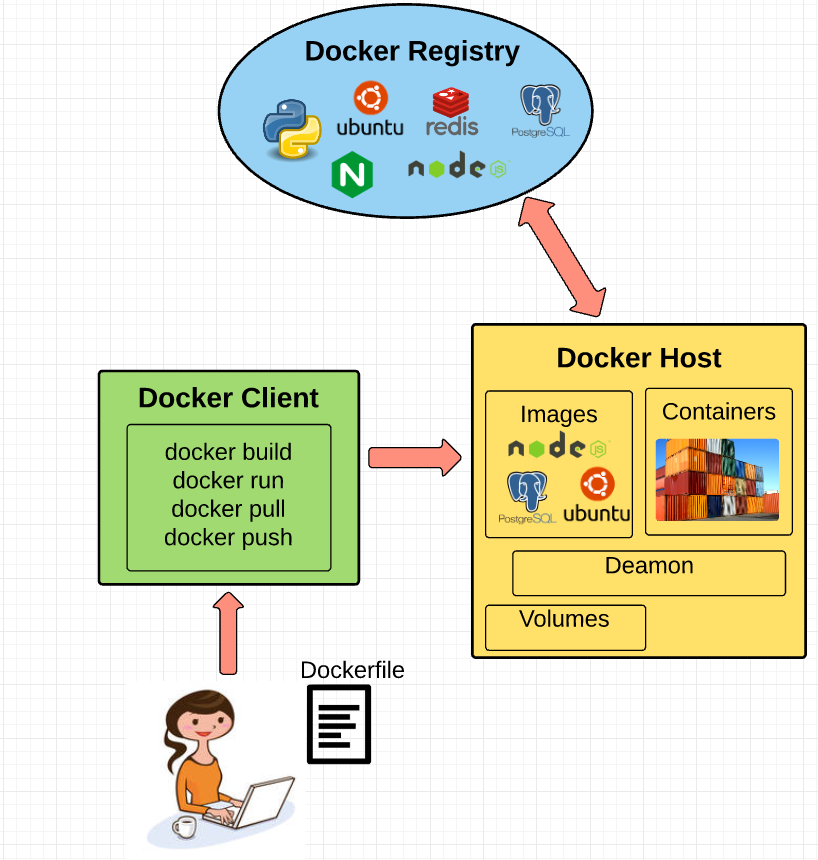

Fundamental Docker Concepts

After establishing the big picture, let's examine each of Docker's fundamental components in turn:

- Images: A Docker image is a self-contained package that contains everything an application needs to run, including the code, a runtime, libraries, environment variables, and config files. Images are built using a Dockerfile, which is a text file that contains instructions for how to build an image.
- Containers: A Docker container is a running instance of a Docker image. When you run a container, you are creating a lightweight and isolated environment in which to run your application. Containers are designed to be portable so that you can run the same container on any machine that has the Docker runtime installed.
- Registries: A Docker registry is a repository for Docker images. Docker provides a public registry, called Docker Hub, where you can find and share images with the broader Docker community. You can also create and manage your own private registries to store and share images within your organization.
- Compose: Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you can use a YAML file to define the services that make up your application and then use a single command to create and start all of the containers. This makes it easier to manage and coordinate the containers that make up your application.
- Swarm: Docker Swarm is a tool for orchestrating a cluster of Docker nodes as a single virtual system. With Swarm, you can create a cluster of machines that are running Docker, and then use Swarm to manage and coordinate the containers that are running on the cluster. This allows you to easily scale and manage your application across multiple containers and machines.

Here is an example of a simple Dockerfile that can be used to build a Docker image for a Node.js application:

```dockerfile
# Use the official Node.js 10.x image as the base image
FROM node:10

# Create a new directory for our application
RUN mkdir -p /usr/src/app

# Set the working directory for the rest of the instructions
WORKDIR /usr/src/app

# Copy the package.json file from the host machine into the image
COPY package.json /usr/src/app/

# Install dependencies
RUN npm install

# Copy the rest of the application code from the host machine into the image
COPY . /usr/src/app

# Document that the application listens on port 8080 (publish it with docker run -p)
EXPOSE 8080

# Run the Node.js application when the container starts
CMD ["npm", "start"]
```

This Dockerfile uses the official Node.js 10.x Docker image as the base image and then uses a series of instructions to build a new Docker image that includes the dependencies and code needed to run a Node.js application. Node.js 10 reached end of life in April 2021, so for a new project you would normally start from a currently supported LTS tag such as `node:22` instead; the remaining instructions stay the same.

Here is a brief explanation of each line in the Dockerfile:

- `FROM node:10`: specifies the base image that the new image is built on. In this case, it is the official Node.js 10.x Docker image.
- `RUN mkdir -p /usr/src/app`: creates the directory in the image where the application code will be stored. (`WORKDIR` would also create it if it did not exist, so this step is optional.)
- `WORKDIR /usr/src/app`: sets the working directory for the rest of the instructions in the Dockerfile, and for the container's command at run time.
- `COPY package.json /usr/src/app/`: copies the `package.json` file from the build context on the host machine into the `/usr/src/app` directory in the image. Copying it on its own, before the rest of the code, lets Docker reuse the cached result of the next step when only the application code changes.
- `RUN npm install`: installs the dependencies listed in `package.json`.
- `COPY . /usr/src/app`: copies the rest of the application code from the build context into the `/usr/src/app` directory in the image.
- `EXPOSE 8080`: documents that the application listens on port 8080. It does not make the port reachable on its own; you still publish the port when you start a container, with `docker run -p`.
- `CMD ["npm", "start"]`: specifies the command that runs when a container starts from this image. Here it runs `npm start`, which starts the Node.js application.

To build a Docker image using this Dockerfile, run the following command in the directory that contains it:

```shell
docker build -t my-nodejs-app .
```

This builds a Docker image from the instructions in the Dockerfile and tags it `my-nodejs-app` (with the default tag `latest`). The final `.` tells Docker to use the current directory as the build context. You can then run a Docker container from this image with the following command:

```shell
docker run -p 8080:8080 my-nodejs-app
```

This runs a Docker container from the `my-nodejs-app` image and maps port 8080 on the host machine to port 8080 in the container (`-p host_port:container_port`). You can then reach the Node.js application running in the container on port 8080 of the host machine, for example at http://localhost:8080.
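The Dockerfile's `CMD` runs `npm start`, so the build and run commands above only work if the project contains a `package.json` with a `start` script and an application listening on port 8080. The sketch below creates a hypothetical minimal project; the file contents and the greeting text are illustrative assumptions, not part of the original example. Run it in an empty directory, place the Dockerfile next to the generated files, and then use the same `docker build` and `docker run` commands.

```shell
# package.json: "npm start" (the Dockerfile's CMD) runs server.js
cat > package.json <<'EOF'
{
  "name": "my-nodejs-app",
  "version": "1.0.0",
  "private": true,
  "scripts": {
    "start": "node server.js"
  }
}
EOF

# server.js: a dependency-free HTTP server on port 8080, the port the
# Dockerfile EXPOSEs and "docker run -p 8080:8080" publishes.
# It binds to 0.0.0.0 (all interfaces), not just 127.0.0.1.
cat > server.js <<'EOF'
const http = require('http');

const server = http.createServer((req, res) => {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end('Hello from inside a container!\n');
});

server.listen(8080, '0.0.0.0', () => {
  console.log('Listening on port 8080');
});
EOF

# .dockerignore: keep the host's node_modules out of "COPY . /usr/src/app",
# so the image only contains dependencies installed by "RUN npm install"
printf 'node_modules\nnpm-debug.log\n' > .dockerignore
```

With the container running, `curl http://localhost:8080` from another terminal should print the greeting. The server binds to `0.0.0.0` because a process listening only on the container's own loopback interface cannot receive the traffic that `-p` forwards into the container.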

Useful commands for Docker

Here are some useful Docker commands that you may find helpful:

- `docker build`: builds a Docker image from a Dockerfile. The final argument is the build context, the directory whose files the Dockerfile can `COPY`. The `-t` flag sets the image name and an optional tag, and `-f` points to a Dockerfile with a different name or location. For example: `docker build -t my-nodejs-app .`
- `docker run`: creates and starts a container from a Docker image. You pass the image name (and optionally its tag) plus any options. `-d` runs the container in the background, `--name` gives it a name you can use in later commands, and `-p` publishes a port. For example: `docker run -d --name my-nodejs-app -p 8080:8080 my-nodejs-app`
- `docker ps`: lists the running containers. Add the `-a` flag to list all containers, including stopped ones.
- `docker exec`: runs a command inside a running container, identified by its container ID or name. For example, to list the files in the working directory of the container started above: `docker exec my-nodejs-app ls`
- `docker stop`: stops a running container, identified by its container ID or name. For example: `docker stop my-nodejs-app`
- `docker rm`: removes a stopped container, identified by its container ID or name. For example: `docker rm my-nodejs-app`
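The commands above stop short of registries. Sharing an image through Docker Hub, the public registry described earlier, takes three more commands: `docker tag`, `docker push`, and `docker pull`. In this sketch the user name `your-dockerhub-username` and the tag `1.0` are placeholders to replace with your own values, and it assumes you have already run `docker login` once on the machine that pushes.

```shell
# Placeholder values: replace with your own Docker Hub user name and tag
DOCKERHUB_USER=your-dockerhub-username
REMOTE_IMAGE="$DOCKERHUB_USER/my-nodejs-app:1.0"

# Give the local image a second name that points at your Docker Hub repository
docker tag my-nodejs-app "$REMOTE_IMAGE"

# Upload the image to Docker Hub (requires a prior "docker login")
docker push "$REMOTE_IMAGE"

# On any other machine with Docker: download the image and start a container from it
docker pull "$REMOTE_IMAGE"
docker run -d -p 8080:8080 "$REMOTE_IMAGE"
```

The other machine needs neither the Dockerfile nor the source code: everything the application needs is already inside the pulled image.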

The Future of Docker: Docker and VMs Will Co-exist

It is likely that Docker and virtual machines (VMs) will continue to co-exist in the future, as they both offer different benefits and are suited to different use cases.

Docker is a lightweight and portable technology that is well-suited for building and deploying applications in a distributed environment. Its focus on containers allows developers to package up their applications with all of the necessary dependencies and libraries, and to run those applications on any machine that has the Docker runtime installed. This can make it easier to build, test, and deploy applications, especially in a microservices architecture.

On the other hand, VMs are a heavier and more flexible technology that is well-suited for running multiple operating systems on a single physical machine. This can be useful for testing or development purposes, or for running applications that have specific system requirements. VMs offer more control over the underlying hardware and operating system and can be used to run applications that are not compatible with the host operating system.

Overall, each technology will keep being used in the situations for which it is best suited. As the use of containers and microservices continues to grow, Docker is likely to become increasingly important, but VMs will still have a place in many environments. In practice the two are often combined: in the cloud, containers frequently run on virtual machines.