What Is Kubernetes?

Kubernetes (also known as "K8s") is an open-source system for automating the deployment, scaling, and management of containerized applications. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF).

Kubernetes provides a platform-agnostic way to manage containerized applications, making it easier to deploy and run applications in a variety of environments, including on-premises data centres, cloud infrastructure, and hybrid environments. It provides features such as automatic bin-packing, self-healing, horizontal scaling, service discovery and load balancing, secret and configuration management, and much more.

Kubernetes is designed to be extensible, allowing users to customize and add additional functionality through the use of plugins and extensions. It has a large and active developer community, with many companies and organizations contributing to its development and adoption.

Why Kubernetes? What Problem Does K8s Solve?

Kubernetes helps solve three recurring problems in running containerized applications:

Complexity: Containerized applications can be complex to manage, especially at scale. Kubernetes automates many of the tasks involved in deploying and managing these applications, making it easier to deploy and run them at scale.

Portability: Containerized applications can be difficult to deploy and run across different environments, such as on-premises data centres, cloud infrastructure, and hybrid environments. Kubernetes is platform-agnostic, so the same application definitions can be deployed in any of these environments.

Reliability: Containerized applications can fail for many reasons, for example resource constraints or network issues. Kubernetes provides features such as self-healing and horizontal scaling, which can help improve the reliability and availability of your applications.

The move from legacy monoliths to microservices has produced large applications made up of many containers. Each microservice is typically packaged as its own container image and must be deployed, managed, and scaled with as little overhead as possible. Handling thousands of containers by hand quickly became tedious for organizations, and this problem drove the adoption of K8s as one of the most popular container orchestration tools.

What Features Does K8s Offer?

Kubernetes (also known as "K8s") offers a wide range of features for deploying and managing containerized applications, including:

Automatic bin-packing: Kubernetes automatically allocates containers to nodes in a cluster based on resource requirements, allowing you to make the most efficient use of your resources.

Self-healing: Kubernetes can automatically restart failed containers, replace or reschedule pods when a node fails, and kill containers that do not respond to user-defined health checks.

Horizontal scaling: Kubernetes can automatically scale the number of replicas of a containerized application up or down based on resource utilization or other user-defined metrics.

Service discovery and load balancing: Kubernetes can automatically expose containers as services and provide load balancing for them, making it easier to route traffic to the correct containers.

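Secret and configuration management: Kubernetes provides a centralized way to manage sensitive information such as passwords, tokens, and keys (Secrets), as well as application configuration data (ConfigMaps), without rebuilding container images. Note that Secrets are stored unencrypted (only base64-encoded) by default, so encryption at rest and access controls should be configured separately.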

Persistent storage: Kubernetes can provision and manage persistent storage for containers, allowing them to retain data even if the containers are restarted or moved to a different node.

Resource quotas and limits: Kubernetes allows you to set resource limits and quotas for your containers and applications, helping to ensure that they do not consume too many resources and cause issues on the cluster.

These are just a few of the many features offered by Kubernetes. It is designed to be extensible, allowing users to customize and add additional functionality through the use of plugins and extensions.
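To make the horizontal scaling feature above more concrete, here is a minimal sketch of the ratio-based calculation described in the Kubernetes documentation for the Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name and the default `min_replicas` and `max_replicas` values are assumptions made for illustration, and the real autoscaler also applies a tolerance and stabilization behaviour that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Suggest a replica count from the ratio of observed to target metric.

    Hypothetical helper for illustration only, not part of any Kubernetes API.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the current count by how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale up.
print(desired_replicas(4, 90.0, 60.0))  # 6
# 4 replicas at 20% average CPU with a 60% target: scale down.
print(desired_replicas(4, 20.0, 60.0))  # 2
```

Rounding up rather than down errs on the side of having slightly more capacity than the target strictly requires.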

Kubernetes Architecture

In a Kubernetes cluster, there are two types of nodes: master nodes (called control plane nodes in current Kubernetes documentation) and worker nodes.

Master nodes: These nodes run the Kubernetes master components, which are responsible for maintaining the desired state of the cluster and reconciling any differences between the desired state and the current state. The master communicates with the nodes in the cluster using the Kubernetes API.

Worker nodes: These nodes run the containers and are managed by the master. They are responsible for executing the tasks assigned to them by the master, such as running containers and responding to requests from the API server.

The master and worker nodes work together to deploy and manage the containerized applications in the cluster. The master node receives requests from users or other systems through the API server and instructs the worker nodes so that the desired state of the cluster is maintained.

Overall, the basic architecture of a Kubernetes cluster consists of one or more master nodes and one or more worker nodes, which work together to deploy and manage containerized applications.
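The idea of reconciling desired state with current state can be illustrated with a small, self-contained simulation. This is only a sketch: the `reconcile` function, the pod naming scheme, and the `web` prefix are hypothetical, and a real controller talks to the API server instead of editing a list in memory.

```python
from typing import List


def reconcile(desired: int, running: List[str], prefix: str = "web") -> List[str]:
    """Return a pod list whose length matches the desired replica count.

    Hypothetical stand-in for one pass of a control loop.
    """
    pods = list(running)  # work on a copy of the observed state
    next_id = 0
    # Too few pods: create missing ones using the first unused names.
    while len(pods) < desired:
        name = f"{prefix}-{next_id}"
        if name not in pods:
            pods.append(name)
        next_id += 1
    # Too many pods: remove the most recently added ones.
    while len(pods) > desired:
        pods.pop()
    return pods


# One pod survived a failure, but three are desired: two are recreated.
print(reconcile(3, ["web-0"]))  # ['web-0', 'web-1', 'web-2']
# The desired count was lowered to one: the extra pods are removed.
print(reconcile(1, ["web-0", "web-1", "web-2"]))  # ['web-0']
```

Running the same pass repeatedly is harmless: once the observed state matches the desired state, the function returns the list unchanged.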

Worker Node in a K8s Cluster

A worker node is a machine that runs the containers and is managed by the master. It executes the tasks assigned to it by the master, such as running containers and responding to requests from the API server.

A worker node typically consists of the following components:

Container runtime: This is the software that is responsible for running the containers on the node. Common container runtimes include Docker and containerd.

Kubelet: The kubelet is the primary agent that runs on each node and is responsible for communicating with the master and executing tasks assigned by the master. It is responsible for starting and stopping containers, as well as reporting the status of the node to the master.

kube-proxy: kube-proxy is a network proxy that runs on each node and maintains the network rules that forward traffic addressed to a Service to one of its backing pods. This is how service load balancing is implemented on the node.

Pod: A pod is the basic unit of deployment in Kubernetes, and represents a single instance of an application. A pod can contain one or more containers, and all containers in a pod share the same network namespace (and therefore the same IP address) and can share storage volumes.

Containers: Containers are the basic unit of packaging in Kubernetes, and are used to package applications and their dependencies.

Overall, the worker node is responsible for running the containers and executing tasks assigned by the master in a Kubernetes cluster. It consists of several components that work together to manage the containers and communicate with the master.
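As an illustration of the pod concept described above, the sketch below builds a Pod manifest as a plain Python dictionary with two containers that mount the same volume. The field names follow the Kubernetes Pod API (`apiVersion`, `kind`, `spec.containers`, `volumeMounts`, `emptyDir`), while the pod name, container names, image tags, and paths are assumptions chosen for the example. The JSON it produces could be saved to a file and passed to `kubectl apply -f`.

```python
import json

# Illustrative Pod with a web server and a log-reading sidecar.
# All names, images, and paths here are hypothetical examples.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar"},
    "spec": {
        # A scratch volume that lives as long as the pod does.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/var/log/nginx"}
                ],
            },
            {
                "name": "log-tailer",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/logs"}
                ],
            },
        ],
    },
}

# Sanity check: every mount must refer to a volume declared in the pod.
declared = {v["name"] for v in pod["spec"]["volumes"]}
mounted = {
    m["name"]
    for c in pod["spec"]["containers"]
    for m in c["volumeMounts"]
}
manifest_json = json.dumps(pod, indent=2)
print(mounted <= declared)
```

Because both containers run in the same pod, the sidecar reads the files the web server writes, and the two could also reach each other over `localhost`.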

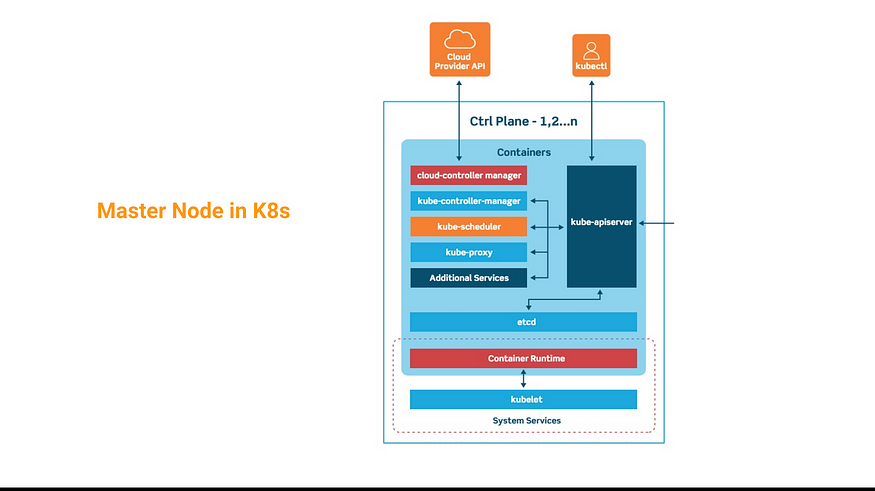

Master Node in a K8s Cluster

In a Kubernetes cluster, the master node is the node that manages the cluster. It is responsible for recording the desired state of the cluster, such as the desired number and types of pods in each namespace, and for ensuring that the actual state of the cluster matches it.

The master node consists of several components, including:

etcd: etcd is a distributed key-value store that stores the cluster's configuration data. It is used by the Kubernetes master to store and retrieve the current state of the cluster.

kube-apiserver: The Kubernetes API server is the main component of the master node. It exposes the Kubernetes API, which can be used by clients (such as kubectl) to communicate with the cluster. It is responsible for serving the API and processing requests from clients.

kube-scheduler: The Kubernetes scheduler is responsible for scheduling pods onto worker nodes. It looks at the resource requirements of each pod and determines which worker node has the capacity to run the pod.

kube-controller-manager: The Kubernetes controller manager is responsible for running controllers that handle tasks such as replicating pods, maintaining the desired number of replicas of a deployment, and reconciling the actual state of the cluster with the desired state.

The master node is a critical component of a Kubernetes cluster, as it is responsible for maintaining the desired state of the cluster and ensuring that the actual state matches the desired state.
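The scheduling step described for kube-scheduler can be sketched as a two-phase decision: first filter out nodes that cannot fit the pod, then score the remaining nodes and pick the best one. The code below is a deliberately simplified illustration, not the actual kube-scheduler algorithm. The `pick_node` function, the `cpu_m` and `mem_mi` keys, and the tight-packing scoring rule are all assumptions made for this example.

```python
from typing import Dict, Optional


def pick_node(pod_request: Dict[str, int],
              free: Dict[str, Dict[str, int]]) -> Optional[str]:
    """Choose a node for a pod, or return None if no node fits.

    Hypothetical helper; resources are millicores (cpu_m) and MiB (mem_mi).
    """
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [
        name for name, res in free.items()
        if res["cpu_m"] >= pod_request["cpu_m"]
        and res["mem_mi"] >= pod_request["mem_mi"]
    ]
    if not feasible:
        return None
    # Score: prefer the node left with the least spare CPU after placement
    # (tight packing); break ties by node name for determinism.
    return min(
        feasible,
        key=lambda n: (free[n]["cpu_m"] - pod_request["cpu_m"], n),
    )


nodes = {
    "node-a": {"cpu_m": 500, "mem_mi": 1024},
    "node-b": {"cpu_m": 2000, "mem_mi": 4096},
    "node-c": {"cpu_m": 1200, "mem_mi": 512},
}

# Only node-b has both enough CPU and enough memory.
print(pick_node({"cpu_m": 1000, "mem_mi": 1024}, nodes))  # node-b
# Every node fits; node-a is packed most tightly.
print(pick_node({"cpu_m": 250, "mem_mi": 256}, nodes))  # node-a
# No node can satisfy this request, so the pod would stay pending.
print(pick_node({"cpu_m": 4000, "mem_mi": 256}, nodes))  # None
```

Tight packing is only one possible scoring policy; a policy that spreads pods across nodes would instead prefer the node with the most spare capacity.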