How does the Aviator Platform help developers streamline their workflows?

This article shows how the Aviator Platform can be useful for developers.

Automating merges is a crucial step in keeping your builds healthy. In this blog post, we'll discuss how to automate merges to improve your developer workflow.

Let's start by understanding monorepos and polyrepos.

Monorepo vs Polyrepo:

A monorepo, short for "monolithic repository," is a single repository that contains all of the code for a project or organization. This approach is characterized by a single version control repository and a single root directory, with all of the code for the project stored in subdirectories underneath it. A monorepo is often used when multiple teams are working on different parts of a large project and it is easier to manage the codebase in a single place.

A polyrepo, short for "polyrepository," is a collection of multiple repositories that each contain code for different parts of a project or organization. This approach is characterized by multiple independently versioned repositories and multiple root directories, with each repository containing code for a specific component or subproject. A polyrepo is often used when teams are working on different components of a project in parallel and it is better to keep their codebases separate.

Advantages of Monorepo:

Facilitates code sharing and reuse

Improves collaboration and communication

Simplifies dependency management

Reduces duplication of effort

Facilitates centralized testing and deployment

Advantages of Polyrepo:

Provides flexibility and autonomy

Facilitates parallel development

Improves code isolation

Provides better security

It's important to note that each approach has its own advantages and disadvantages, and the choice of approach depends on the size and nature of the project, and the specific needs of the development teams.

When it comes to merging, many people think it's as simple as it looks. However, many challenges can arise, particularly when working with large teams and monorepos.

One of the biggest challenges is the possibility of mainline build failures. These can happen for a variety of reasons:

Complex dependencies

Timeouts

Infrastructure issues

Implicit conflicts, and many more

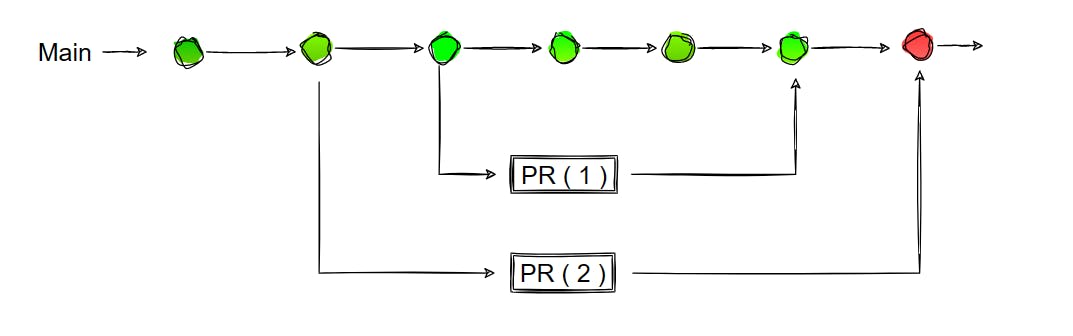

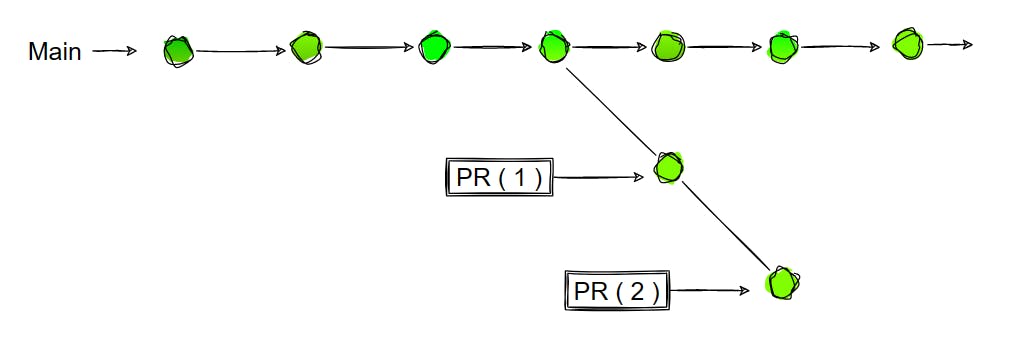

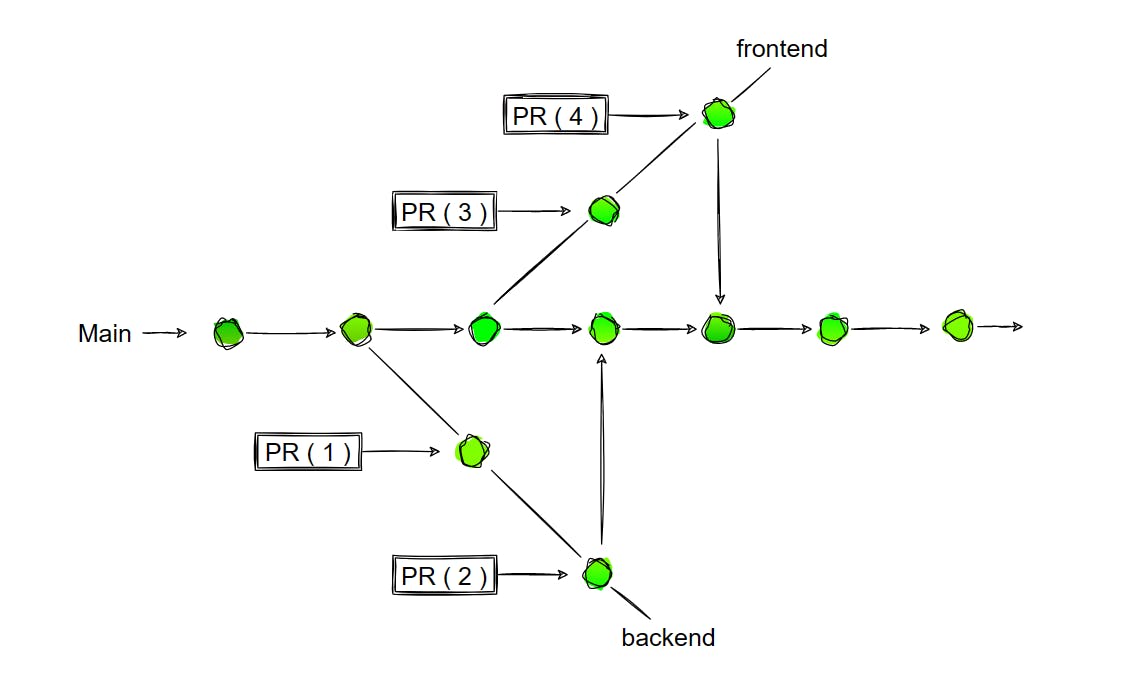

To give an example, let's say two pull requests are being merged into a monorepo at around the same time. Both of them have passing CI, but once both are merged into the mainline, the build fails. This can happen because the two pull requests modify related pieces of code in ways that are not compatible with each other, even though neither causes a textual merge conflict.
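To make this kind of implicit conflict concrete, here is a minimal sketch. It uses a toy model in which a codebase is just a set of defined functions plus a map of call sites; the names `MAIN`, `ci_passes`, `pr_a`, and `pr_b` are hypothetical and exist only for illustration.

```python
# Toy model (all names hypothetical): a codebase is a set of defined functions
# plus a mapping of call sites to the function each one calls.
MAIN = {
    "functions": {"get_user"},
    "call_sites": {"profile_page": "get_user"},
}


def ci_passes(codebase):
    """Toy CI check: every call site must call a function that exists."""
    return all(
        callee in codebase["functions"]
        for callee in codebase["call_sites"].values()
    )


def pr_a(codebase):
    """PR A renames get_user to fetch_user and updates the caller it knows about."""
    return {
        "functions": (codebase["functions"] - {"get_user"}) | {"fetch_user"},
        "call_sites": {**codebase["call_sites"], "profile_page": "fetch_user"},
    }


def pr_b(codebase):
    """PR B adds a new call site that still uses the old function name."""
    return {
        "functions": set(codebase["functions"]),
        "call_sites": {**codebase["call_sites"], "settings_page": "get_user"},
    }


print(ci_passes(pr_a(MAIN)))        # True: PR A passes on its own
print(ci_passes(pr_b(MAIN)))        # True: PR B passes on its own
print(ci_passes(pr_b(pr_a(MAIN))))  # False: together they break the mainline
```

Neither change touches the same lines as the other, so a version control system would merge both cleanly; only a CI run on the combined result reveals the problem.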

As your team grows, these types of issues become more common. Teams may set up their own build queues to ensure someone is responsible for fixing failures, releases may get delayed, and sometimes changes need to be rolled back.

It's important to address these challenges in order to improve developer productivity and ensure that builds are healthy. Automating merges is one way to do this, as it can help identify and resolve conflicts before they cause failures.

Overall, automating merges is a crucial step in keeping your builds healthy and improving your developer workflow. With the right tools and processes in place, you can ensure that your team is able to work efficiently and avoid delays caused by mainline build failures.

How do we solve this problem?

The solution to these challenges is merge automation.

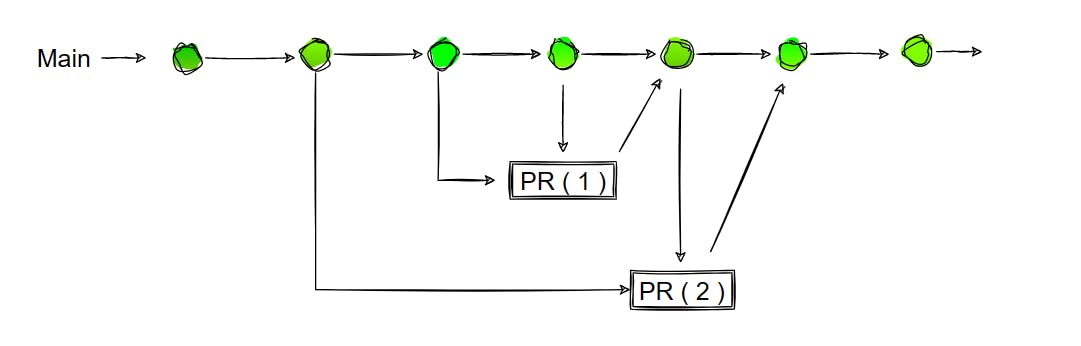

In merge automation, instead of manually merging changes, developers inform the system that the pull request is ready. The system then automatically merges the latest main into the pull request and runs the CI. This ensures that the changes are always validated against the most recent main, which catches conflicts and other issues before they reach the mainline.
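The flow above can be sketched as a simple serial queue. This is a minimal illustration, not Aviator's implementation: main is modeled as a list of change names, and `run_ci` is a hypothetical callback that stands in for a real CI run.

```python
from typing import Callable, List, Tuple


def process_queue(
    main: List[str],
    queue: List[str],
    run_ci: Callable[[List[str]], bool],
) -> Tuple[List[str], List[str]]:
    """Validate each ready PR against the latest main, one at a time.

    Returns the new main and the list of rejected PRs.
    """
    main = list(main)
    rejected = []
    for pr in queue:
        candidate = main + [pr]   # latest main merged with the PR
        if run_ci(candidate):     # CI runs on the combined result
            main = candidate      # only a validated result becomes main
        else:
            rejected.append(pr)
    return main, rejected


# Hypothetical CI: changes "A" and "B" are incompatible with each other.
def toy_ci(candidate: List[str]) -> bool:
    return not ({"A", "B"} <= set(candidate))


print(process_queue(["base"], ["A", "B", "C"], toy_ci))
# (['base', 'A', 'C'], ['B'])
```

Because "B" is tested on top of a main that already contains "A", the incompatibility is caught before it reaches the mainline.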

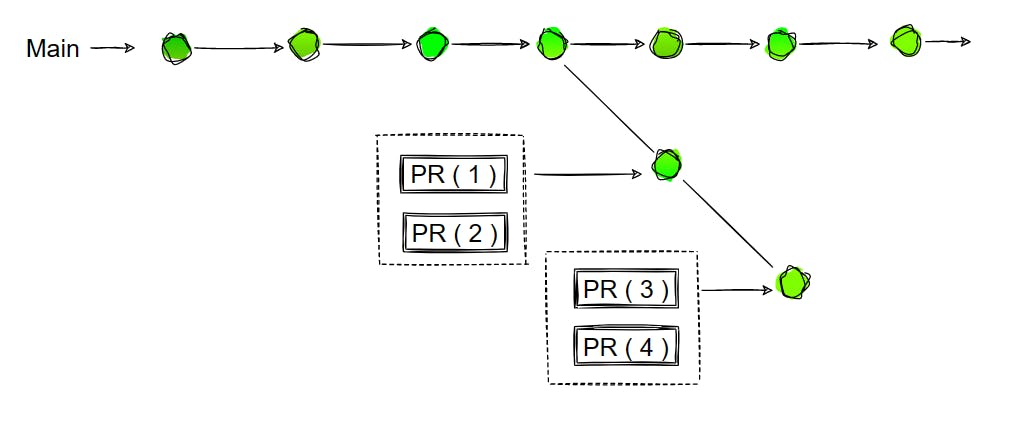

Batching Changes:

One way to improve merge automation further is by batching changes. Instead of merging one pull request at a time, the system can wait for a few pull requests to be collected before running the CI. This reduces the number of CI runs and the time pull requests wait to be merged. In case of failure, the system can bisect the batch to identify the pull request causing the failure.
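The bisection step can be sketched as a binary search over prefixes of the batch. This sketch assumes that main passes on its own, that the full batch fails, and that the failure comes from a single pull request; `run_ci` is a hypothetical stand-in for a real CI run.

```python
from typing import Callable, List


def find_failing_pr(
    main: List[str],
    batch: List[str],
    run_ci: Callable[[List[str]], bool],
) -> str:
    """Binary-search a failing batch for the PR that introduced the failure.

    Invariant: main + batch[:lo] passes and main + batch[:hi] fails.
    """
    lo, hi = 0, len(batch)
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if run_ci(main + batch[:mid]):
            lo = mid   # the first `mid` PRs are fine; culprit is later
        else:
            hi = mid   # the failure is already present in the first `mid` PRs
    return batch[hi - 1]


# Hypothetical CI: the build breaks as soon as "p3" is included.
def toy_ci(candidate: List[str]) -> bool:
    return "p3" not in candidate


print(find_failing_pr(["base"], ["p1", "p2", "p3", "p4"], toy_ci))  # p3
```

For a batch of n pull requests this needs about log2(n) extra CI runs to locate the culprit, rather than one run per pull request.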

Merge in parallel Way...

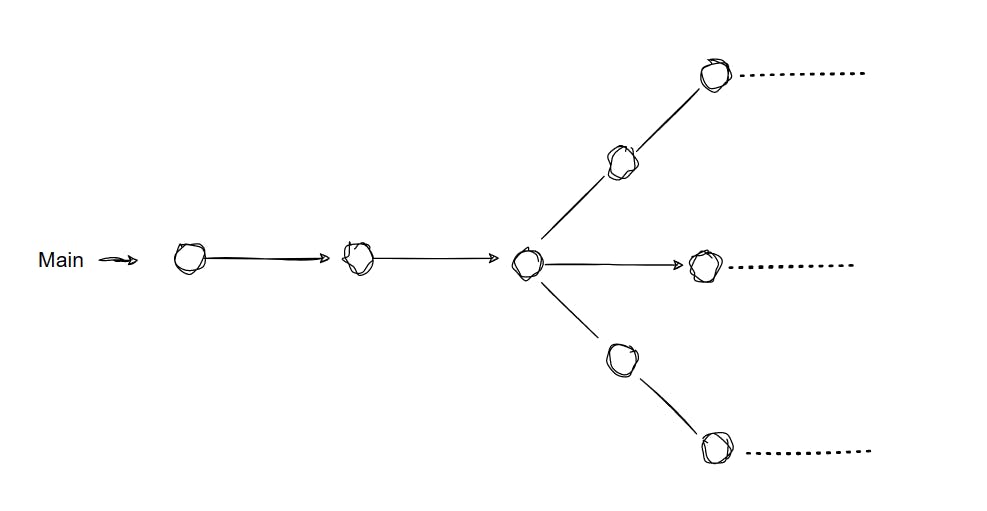

Another way to improve merge automation is by thinking of merge in a parallel Way. Instead of thinking of Main as a linear path, think of it as several potential features that Main can represent. This means that multiple merge requests can be processed simultaneously, reducing the time it takes to merge changes.

Optimistic Queues:

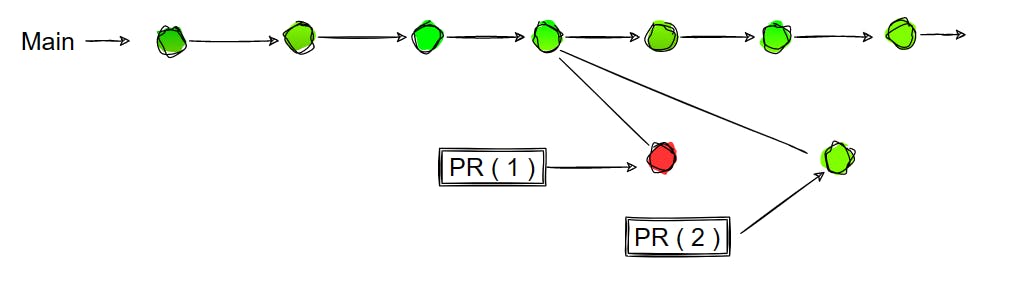

One way to further improve merge automation is through the use of optimistic merging. In this approach, instead of waiting for a pull request to be fully tested and pass CI before merging it, the system optimistically assumes that the pull request will pass and begins the merge process.

To give an example, let's say the current Main is at a certain point and a new pull request (PR) is ready to be merged. Similar to the previous examples, the system would pull the latest Mainline and create an alternate Main branch where the CI is run. However, in this case, while the CI is running on the first PR, a second PR comes in. Instead of waiting for the first CI to pass, the system optimistically assumes that the first PR will pass and starts a new CI on the second PR as well. Once both PRs pass their respective CIs, they are both merged.

Now, in the event that the CI for the first PR fails, the system would reject the alternate Main and create a new alternate Main where the rest of the changes are run. This process ensures that the failed PR does not cause a build failure and the rest of the changes can continue to be tested and merged.

Optimistic merging can help improve merge time and efficiency by allowing multiple pull requests to be processed simultaneously. However, it's important to note that if the assumption that a PR will pass is wrong, the CI runs that were started on top of it are wasted, and additional time is spent retesting the remaining changes.
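A minimal sketch of an optimistic queue follows. It is an illustration under simplifying assumptions, not Aviator's implementation: main is a list of change names, `run_ci` is a hypothetical callback, and the speculative CI runs are evaluated in a loop here although a real system would run them in parallel.

```python
from typing import Callable, List, Tuple


def optimistic_merge(
    main: List[str],
    queue: List[str],
    run_ci: Callable[[List[str]], bool],
) -> Tuple[List[str], List[str], int]:
    """Speculatively test each PR on top of all PRs queued before it.

    Returns (new main, rejected PRs, number of CI runs).
    """
    merged, pending = list(main), list(queue)
    rejected: List[str] = []
    ci_runs = 0
    while pending:
        # Candidate i assumes that pending PRs 0..i-1 will all pass.
        candidates = [merged + pending[: i + 1] for i in range(len(pending))]
        results = [run_ci(c) for c in candidates]  # conceptually parallel
        ci_runs += len(candidates)
        if all(results):
            return candidates[-1], rejected, ci_runs
        first_fail = results.index(False)
        # Everything before the first failure was validated and can merge.
        merged = merged + pending[:first_fail]
        rejected.append(pending[first_fail])
        # Later candidates were built on the failed PR, so they are rerun.
        pending = pending[first_fail + 1:]
    return merged, rejected, ci_runs


def toy_ci(candidate: List[str]) -> bool:
    return "B" not in candidate  # hypothetical: PR "B" breaks the build


print(optimistic_merge(["base"], ["A", "B", "C"], toy_ci))
# (['base', 'A', 'C'], ['B'], 4)
```

In the example, the speculative run for "C" on top of "B" is discarded and "C" is retested without "B", which is the extra cost of a wrong optimistic assumption.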

Batching Optimistic Queues:

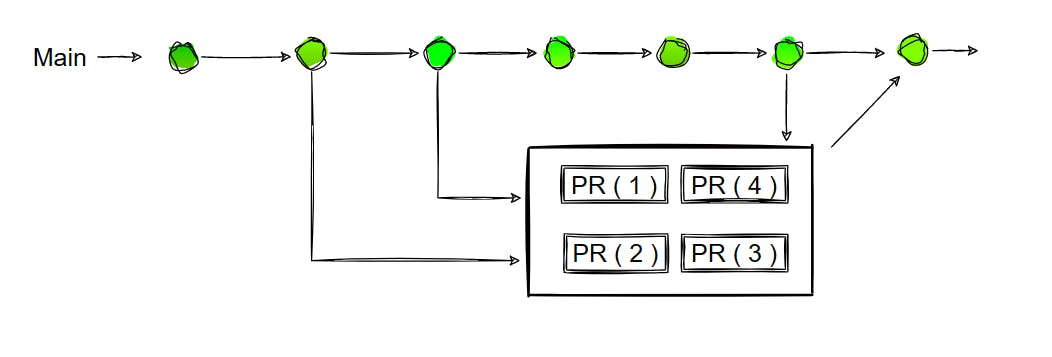

One way to further optimize merge automation is by combining different strategies that have been discussed. One example is combining the strategy of optimistic merging with batching. Instead of running a CI on every individual PR, this approach combines multiple PRs into batches and runs a CI on the entire batch. If the batch passes, all PRs within the batch are merged. However, if the batch fails, the system can split up the batch and identify which PR caused the failure.

This approach can help improve merge time and efficiency by reducing both the number of CI runs and the wait time, while still identifying the specific PR that caused a failure so that it does not break the build.
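One possible way to combine the two strategies is sketched below. It treats each batch as the unit of speculation and, when a batch fails, splits it in half and tries again. The function name, the halving policy, and the `run_ci` callback are assumptions made for illustration.

```python
from typing import Callable, List, Tuple


def batched_optimistic(
    main: List[str],
    queue: List[str],
    batch_size: int,
    run_ci: Callable[[List[str]], bool],
) -> Tuple[List[str], List[str], int]:
    """Optimistic queue over batches; a failing batch is split and retried.

    Returns (new main, rejected PRs, number of CI runs).
    """
    batches = [queue[i:i + batch_size] for i in range(0, len(queue), batch_size)]
    merged, rejected, ci_runs = list(main), [], 0
    while batches:
        # Candidate i assumes that batches 0..i-1 will all pass.
        candidates, acc = [], list(merged)
        for batch in batches:
            acc = acc + batch
            candidates.append(acc)
        results = [run_ci(c) for c in candidates]  # conceptually parallel
        ci_runs += len(candidates)
        if all(results):
            return candidates[-1], rejected, ci_runs
        k = results.index(False)
        if k > 0:
            merged = candidates[k - 1]  # batches before the failure merge
        failed, rest = batches[k], batches[k + 1:]
        if len(failed) == 1:
            rejected += failed          # a single PR failed: reject it
            batches = rest
        else:
            mid = len(failed) // 2      # split the failing batch and retry
            batches = [failed[:mid], failed[mid:]] + rest
    return merged, rejected, ci_runs


def toy_ci(candidate: List[str]) -> bool:
    return "p6" not in candidate  # hypothetical: PR "p6" breaks the build


prs = ["p1", "p2", "p3", "p4", "p5", "p6", "p7", "p8"]
new_main, bad, runs = batched_optimistic(["base"], prs, 4, toy_ci)
print(bad, runs)  # ['p6'] 8
```

When every batch passes, eight pull requests in batches of four need only two CI runs; the extra runs in the example are the cost of isolating the failing pull request.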

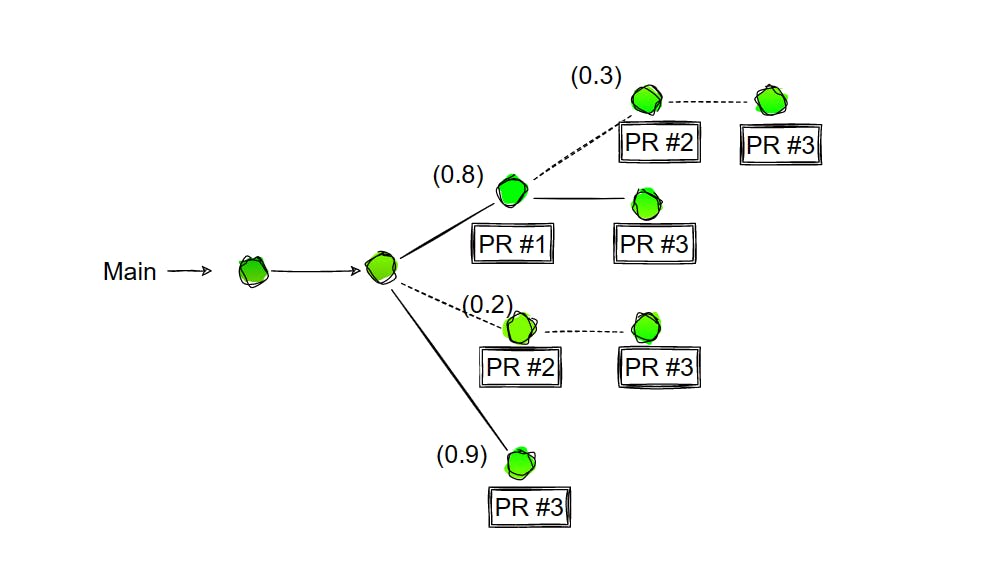

Predictive Modeling:

Another concept to consider in merge automation is using predictive or optimized strategies. Instead of running CI on every possible scenario of what the main branch could look like, depending on whether each PR passes or fails, the system calculates a score for each scenario.

By setting a cutoff score, the system can identify which paths or scenarios are worth pursuing and which ones are not.

This approach can help optimize merge automation by reducing the number of CIs that need to be run, improving merge time and efficiency, and reducing the risk of build failure. However, it's important to note that this approach can have its own set of challenges. It requires careful consideration of how to set the cutoff score and how to weigh the different factors that are being used to calculate the score in order to ensure that it is accurate and effective.
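As a rough sketch of the idea, the snippet below scores each possible future of main and keeps only those above a cutoff. The scoring rule is an assumption made for illustration: each PR is given an estimated pass probability, the PRs are treated as independent, and a scenario's score is the product of the individual probabilities. The article does not specify how real scores are calculated.

```python
from itertools import product
from typing import Dict, List, Tuple


def likely_futures(
    pass_prob: Dict[str, float],
    cutoff: float,
) -> List[Tuple[List[str], float]]:
    """Return (PRs included in main, score) for scenarios scoring >= cutoff."""
    prs = list(pass_prob)
    futures = []
    # Each PR either passes (and lands in main) or fails (and is left out).
    for outcome in product([True, False], repeat=len(prs)):
        score = 1.0
        for pr, passed in zip(prs, outcome):
            score *= pass_prob[pr] if passed else 1.0 - pass_prob[pr]
        if score >= cutoff:
            included = [pr for pr, passed in zip(prs, outcome) if passed]
            futures.append((included, score))
    # Most likely scenarios first.
    return sorted(futures, key=lambda f: f[1], reverse=True)


# Hypothetical estimates: "A" passes 90% of the time, "B" 80% of the time.
for included, score in likely_futures({"A": 0.9, "B": 0.8}, cutoff=0.1):
    print(included, round(score, 2))
# ['A', 'B'] 0.72
# ['A'] 0.18
```

With a cutoff of 0.1, CI would run on only two of the four possible scenarios; raising the cutoff saves more CI runs but increases the chance that the scenario that actually happens was never tested.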

Multiple Queues:

Another strategy is to split the merge queue into multiple independent queues. This can help to improve the efficiency of the merge process by allowing different parts of the codebase to be compiled and run independently. By doing this, teams can ensure that changes to one part of the codebase do not negatively impact the builds of other parts of the codebase.

One way to implement this is by separating PRs into different queues based on their target, such as back-end and front-end. This way, teams can run the PRs in parallel and merge them without impacting each other's builds.

By using multiple queues, teams can also optimize the merge process by running only the CI that is relevant to each queue's PRs. This can help to reduce the number of CI runs, which saves time and resources.
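Separating PRs by target can be sketched as a routing step based on the files each PR changes. The directory prefixes, queue names, and the "shared" fallback queue below are hypothetical choices made for illustration.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical mapping from path prefix to queue name.
QUEUE_BY_PREFIX = {"backend/": "backend", "frontend/": "frontend"}


def assign_queue(changed_files: List[str]) -> str:
    """Pick a queue for a PR based on the paths it changes."""
    queues = set()
    for path in changed_files:
        for prefix, queue in QUEUE_BY_PREFIX.items():
            if path.startswith(prefix):
                queues.add(queue)
                break
        else:
            queues.add("shared")  # file outside every known target
    # A PR that spans several targets cannot be isolated, so it goes to the
    # shared queue and is validated against everything.
    return queues.pop() if len(queues) == 1 else "shared"


def group_by_queue(prs: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Group PR names by the queue they are assigned to."""
    grouped = defaultdict(list)
    for pr, files in prs.items():
        grouped[assign_queue(files)].append(pr)
    return dict(grouped)


print(group_by_queue({
    "pr-1": ["backend/api.py"],
    "pr-2": ["frontend/app.ts"],
    "pr-3": ["backend/db.py", "frontend/form.ts"],
    "pr-4": ["README.md"],
}))
# {'backend': ['pr-1'], 'frontend': ['pr-2'], 'shared': ['pr-3', 'pr-4']}
```

Sending cross-cutting PRs to a shared queue is a conservative design choice: it gives up some parallelism for those PRs in exchange for not assuming that the targets are truly independent.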

In summary, combining merge automation with multiple queues can be a great way to improve the efficiency of the merge process and keep builds healthy.

Merging Challenges in Polyrepo:

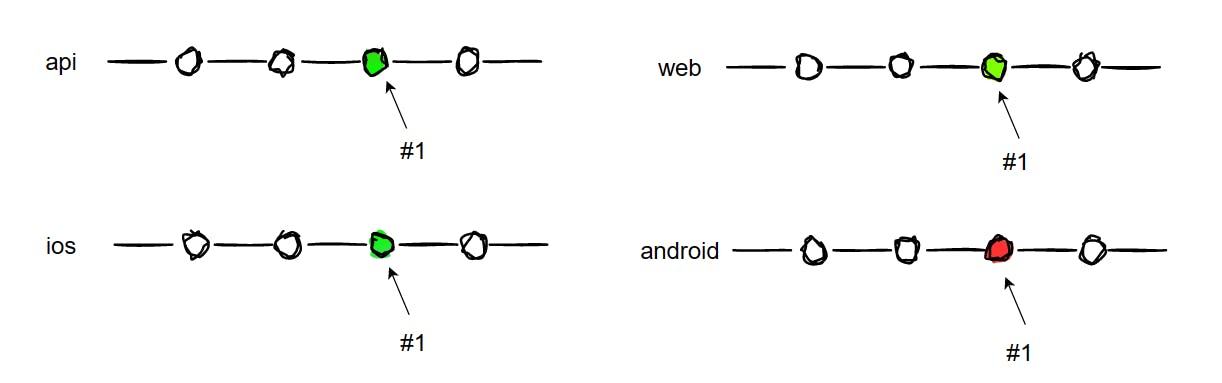

In today's fast-paced software development environment, managing and merging pull requests (PRs) can be a daunting task. With multiple teams working on different projects and features, it's important to ensure that changes made to one repository do not negatively impact other projects. This is where polyrepo support comes in.

A polyrepo is a scenario where you have different repositories for different types of projects. For example, you may have a separate repository for your API, web, iOS, and Android projects. In this scenario, modifying an API can also impact how it interacts with the web, iOS, and Android projects.

The challenge with this scenario is that if three of the four PRs merge but one of them fails, the repositories are left in an inconsistent state. To address this issue, the Aviator system has a workflow called chain sets. This workflow defines the dependencies between changes across the repositories so that they are treated as a single atomic unit. This ensures that either all of these PRs merge together or none of them merge.

In this way, our system helps to ensure consistency and prevent negative impacts across different projects, even when managing multiple repositories. This is a powerful tool for managing pull requests and helps teams to work more efficiently and effectively.
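The all-or-nothing behavior described above can be sketched in a few lines. This is only an illustration of the atomic-merge idea, not Aviator's API: the function name, the repository and PR names, and the `run_ci` callback are hypothetical.

```python
from typing import Callable, Dict


def merge_atomically(
    change_set: Dict[str, str],
    run_ci: Callable[[str, str], bool],
) -> Dict[str, str]:
    """Merge every PR in the set, or none of them.

    change_set maps a repository name to the PR that belongs to the set.
    Returns the PRs that were merged (the whole set, or an empty dict).
    """
    # Validate every PR first; nothing is merged during this phase.
    results = {repo: run_ci(repo, pr) for repo, pr in change_set.items()}
    if all(results.values()):
        return dict(change_set)  # every repo passed: merge them together
    return {}                    # at least one failed: merge nothing


changes = {"api": "pr-10", "web": "pr-22", "ios": "pr-7", "android": "pr-31"}

# Hypothetical CI in which only the iOS PR fails.
print(merge_atomically(changes, lambda repo, pr: repo != "ios"))  # {}
print(merge_atomically(changes, lambda repo, pr: True) == changes)  # True
```

The key design point is the separation between the validation phase and the merge phase, so that a late failure in one repository cannot leave the others partially updated.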

Flaky Test Management:

A flaky test is a test that passes or fails seemingly at random. Even though the code under test has not changed, the test results may vary from run to run. Flaky tests can occur for various reasons, such as race conditions, time-dependent code, and environment-dependent code. They make the testing process unreliable, resulting in false positives or negatives and delaying the release of software.

Managing flaky tests is a common challenge. Because their results are inconsistent, it becomes difficult to tell whether a failure is due to a code change or a flaky test.

To address this challenge, our system provides a way to manage flaky tests. By identifying all the flaky tests in your runs, the Aviator system provides a customized check that you can use to automatically merge changes. This check acts as a validation that your systems are still healthy, even if there are flaky tests present.

When a flaky test fails, the Aviator system first checks whether the failure is related to your changes. If it is not, the test is suppressed in the test report, so that you get a clean build health report. This allows you to validate your changes even with flaky tests present, which works very well with automatic merging.
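The two steps above, identifying flaky tests and suppressing unrelated flaky failures, can be sketched as follows. This is a simplified illustration rather than Aviator's actual detection logic: it assumes a test is flaky if it has both passed and failed on the same commit, and it assumes the set of tests related to a change is already known. All names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, List, Set, Tuple


def find_flaky_tests(history: Iterable[Tuple[str, str, bool]]) -> Set[str]:
    """Find tests with mixed results on the same commit.

    history contains (commit, test_name, passed) records from past runs.
    """
    outcomes = defaultdict(set)
    for commit, test, passed in history:
        outcomes[(commit, test)].add(passed)
    # Same code, different results: the test itself is unreliable.
    return {test for (_, test), seen in outcomes.items() if seen == {True, False}}


def blocking_failures(
    failed_tests: List[str],
    flaky_tests: Set[str],
    tests_related_to_change: Set[str],
) -> List[str]:
    """Keep only failures that should block the merge.

    A failure is suppressed when the test is known to be flaky and is not
    related to the change under test.
    """
    return [
        t for t in failed_tests
        if t not in flaky_tests or t in tests_related_to_change
    ]


history = [
    ("c1", "test_login", True),
    ("c1", "test_login", False),  # same commit, different result: flaky
    ("c1", "test_cart", True),
    ("c2", "test_cart", False),   # different commit: may be a real regression
]
flaky = find_flaky_tests(history)
print(flaky)                                                     # {'test_login'}
print(blocking_failures(["test_login", "test_cart"], flaky, set()))  # ['test_cart']
```

A flaky test that is related to the change still blocks the merge, since its failure may be genuine.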

In conclusion, managing flaky tests is a critical aspect of software development, and our system provides a way to handle it effectively. By identifying flaky tests and providing a customized check to automatically merge changes, our system ensures that your systems are still healthy and that the development process can continue smoothly.

Managing Stacked PRs:

A "managed stack" of PRs refers to a workflow that organizes and manages the merging of pull requests (PRs) in a software development process. This workflow can include strategies such as merge automation, batching, and predicting and scoring potential merge scenarios to optimize the process and keep the build stable and consistent. "Chain sets" is one example of a feature that can be used to manage a stack of PRs: it defines and combines dependencies of changes across different repositories so that they can be treated as a single atomic unit, ensuring that either all of these PRs merge together or none of them merge.

In software development, it's not uncommon for multiple pull requests (PRs) to be stacked on top of one another. This can happen when a change in one PR is dependent on changes made in another PR. In such cases, it can be challenging to manage and merge these PRs in a way that ensures a clean and consistent build.

To tackle this challenge, Aviator offers capabilities to manage stacked PRs. We have features that allow you to automatically merge these changes, as well as a command-line interface (CLI) to sync all your stacked PRs together. This process follows similar patterns to our chain set feature, where we automatically identify dependencies among all these stacked PRs and consider them as atomic units. This means that all of them will merge together or none of them will merge at all. This helps ensure a clean and consistent build, and makes it easier for you to manage your PRs.
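Identifying the dependencies in a stack can be sketched by following each PR's base branch back to main. This is a minimal illustration, not the behavior of Aviator's CLI: the `parents` mapping and the PR names are hypothetical.

```python
from typing import Dict, List


def stack_order(parents: Dict[str, str], tip: str, trunk: str = "main") -> List[str]:
    """Return the PRs in a stack, from the one based on trunk up to the tip.

    parents maps each PR to the branch it is based on: either another PR
    in the stack or the trunk.
    """
    order, seen = [], set()
    current = tip
    while current != trunk:
        if current in seen:
            raise ValueError(f"dependency cycle at {current}")
        seen.add(current)
        order.append(current)
        current = parents[current]  # step down to the PR this one builds on
    return list(reversed(order))    # merge order: bottom of the stack first


parents = {"pr-1": "main", "pr-2": "pr-1", "pr-3": "pr-2"}
print(stack_order(parents, "pr-3"))  # ['pr-1', 'pr-2', 'pr-3']
```

Once the order is known, the whole list can be validated and merged as a single unit, in the same all-or-nothing way as changes that span repositories.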

Conclusion:

In conclusion, Aviator automation is a powerful tool for managing code changes in a software development team. There are several strategies and techniques that can be used to optimize the merge process, such as merge queues, batching, optimistic queues, and predictive modeling.

The Aviator system also provides capabilities to manage flaky tests, polyrepos, and stacked PRs. With these tools, teams can ensure that changes are validated against the most recent main, reduce merge time, and minimize the risk of build failures. Furthermore, by combining these strategies, teams can further optimize their merge process and improve the overall efficiency and stability of their systems.

References:

Automate merging to keep builds healthy at scale, Ankit Jain

Flaky tests: How to manage them practically

In future articles, we will look at how to configure Aviator and use it effectively.