Kubernetes is an open-source platform for automating the deployment, scaling, and management of containerized applications. It provides a way to deploy and run applications in a consistent, reliable manner across a distributed infrastructure, such as a cluster of servers or virtual machines.

Here's a real-life example of how Kubernetes might be used:

Imagine you are a software developer working on a new web application. You have written the code and tested it locally on your computer, and now you want to deploy it to a production environment where it can be accessed by users.

To do this, you might use Kubernetes to deploy the application to a cluster of servers or virtual machines. You could create a container image of your application using a tool like Docker, and then use Kubernetes to deploy the container to the cluster.

Once the application is deployed, Kubernetes can help you manage it by performing tasks such as scaling the number of containers up or down to meet demand, rolling out new versions of the application, and handling failures or crashes of individual containers.

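Kubernetes also exposes basic building blocks for observability, such as container logs (for example through kubectl logs) and health probes, so you can check the health of your application and troubleshoot issues that arise. Full monitoring and log aggregation usually rely on additional tools that run alongside the cluster.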

Overall, Kubernetes offers a powerful platform for deploying and managing applications at scale, allowing developers to focus on building and improving their applications rather than worrying about the underlying infrastructure.

First, let's look at how hardware is represented.

Hardware

Nodes

Here's a real-life example of how nodes might be used in a Kubernetes cluster:

Imagine you are a system administrator responsible for managing a cluster of servers that are running a number of web applications. You have decided to use Kubernetes to manage the deployment and scaling of these applications.

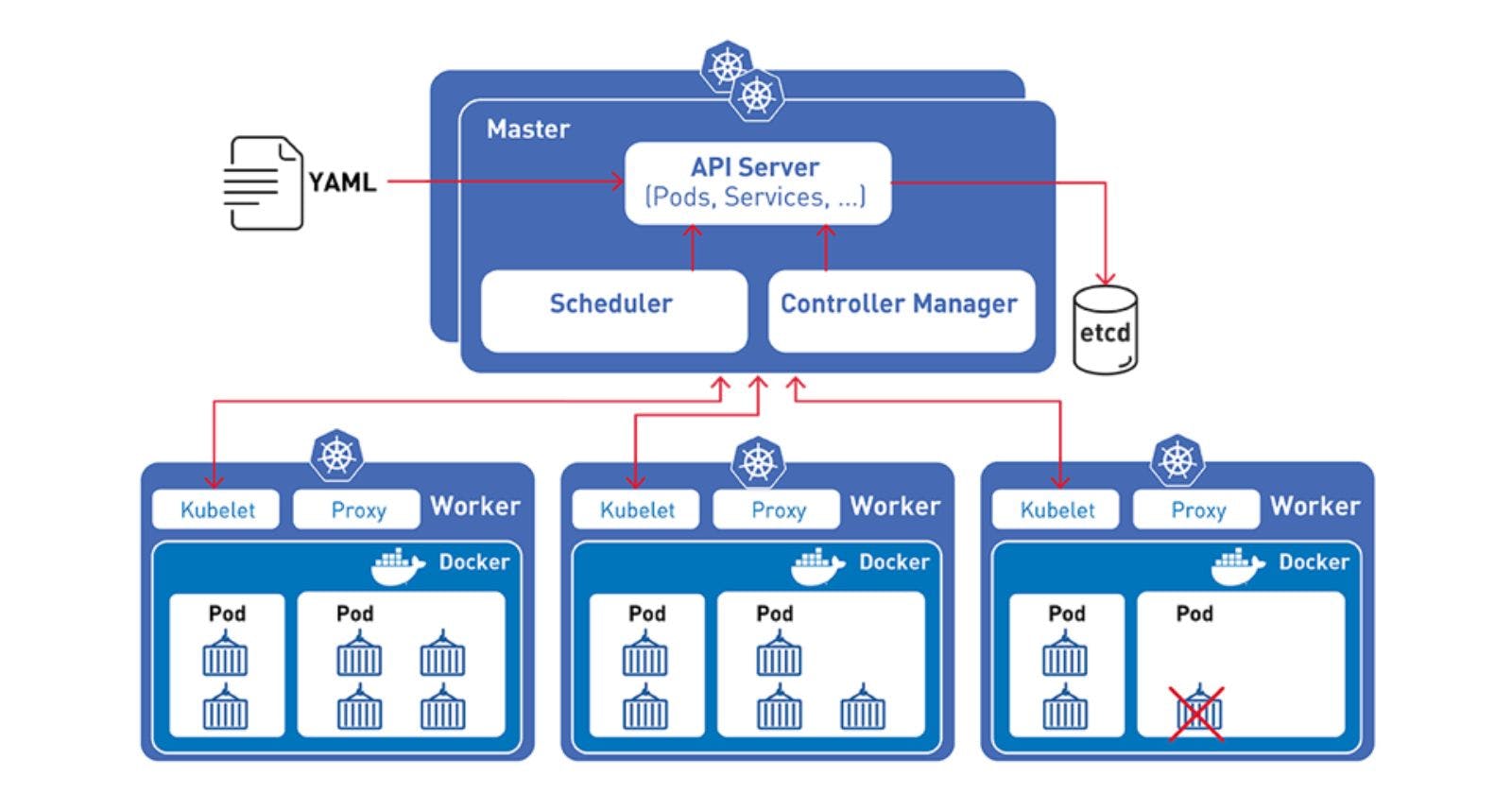

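To do this, you might set up a number of physical or virtual servers as nodes in the Kubernetes cluster. Each node would be configured with a container runtime, such as containerd or CRI-O (Docker Engine can still be used through the cri-dockerd adapter), and a kubelet agent that communicates with the Kubernetes control plane, historically called the master.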

When you deploy an application to the cluster, its containers are grouped into pods (described later), and each pod is scheduled to run on one of the nodes. The Kubernetes scheduler chooses a node for each pod based on the resources available on each node and any constraints or rules that have been specified for the pod.
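The placement decision described above can be illustrated with a toy model. This is only a sketch of the idea of filtering nodes by free resources and then picking one; it is not the real scheduler's algorithm, and the `Node` class, the node names, and the "most free CPU wins" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical, simplified view of a node's spare capacity."""
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def feasible_nodes(nodes, cpu_request, memory_request):
    """Return the nodes that have enough free CPU and memory for the request."""
    return [
        n for n in nodes
        if n.free_cpu_millicores >= cpu_request
        and n.free_memory_mib >= memory_request
    ]


def pick_node(nodes, cpu_request, memory_request):
    """Pick the feasible node with the most free CPU, or None if nothing fits."""
    candidates = feasible_nodes(nodes, cpu_request, memory_request)
    if not candidates:
        return None
    return max(candidates, key=lambda n: n.free_cpu_millicores).name


cluster = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=1500, free_memory_mib=512),
]

# A workload asking for 1000 millicores of CPU and 1024 MiB of memory.
print(pick_node(cluster, cpu_request=1000, memory_request=1024))
```

In this made-up cluster only one node has both enough CPU and enough memory, so it is the one selected. The real scheduler also weighs constraints such as node selectors, affinity rules, and taints, which this sketch leaves out.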

As users access the web application, the demand for resources may increase. If autoscaling is configured, for example with a Horizontal Pod Autoscaler, Kubernetes can automatically scale the number of replicas of the application up or down to meet the demand, adding or removing pods on the nodes as needed.
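Automatic scaling of this kind is commonly driven by the Horizontal Pod Autoscaler, whose documented core rule is: desired replicas = ceil(current replicas × current metric value / target metric value). The sketch below applies that rule and clamps the result to a minimum and maximum. The function name and the example numbers are assumptions, and the real autoscaler adds details that are omitted here, such as a tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the basic HPA scaling rule and clamp to the allowed range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))

# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))
```

The clamp matters in practice: without an upper bound, a sudden spike in the metric could request far more replicas than the nodes can host.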

Overall, the nodes in a Kubernetes cluster play a key role in executing and hosting the containers that make up the applications and services deployed to the cluster. They allow you to deploy and manage your applications in a consistent and reliable manner across a distributed infrastructure.

In Kubernetes, a node is a worker machine that runs pods, and so hosts the containers of an application. Nodes are managed by the Kubernetes control plane (historically called the master) and are responsible for running the applications and services that are deployed to the cluster.

A node can be a physical machine or a virtual machine, and it runs a container runtime, such as containerd or CRI-O, to manage the containers that are deployed to it. The node also runs a kubelet, an agent that communicates with the Kubernetes control plane and ensures that the containers described by the pods assigned to the node are running and healthy.
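The idea of maintaining a desired state can be pictured as a comparison between what should be running and what is running. The following is a deliberately tiny sketch of that comparison, not the kubelet's actual logic; the `reconcile` function and the workload names are hypothetical.

```python
def reconcile(desired, running):
    """Compare desired and running workloads and return the actions needed."""
    desired_set, running_set = set(desired), set(running)
    return {
        "start": sorted(desired_set - running_set),
        "stop": sorted(running_set - desired_set),
    }


# The node should run "web" and "cache", but currently runs "web" and "old-worker".
actions = reconcile(desired=["web", "cache"], running=["web", "old-worker"])
print(actions)
```

Once the actions have been carried out, running the same comparison again yields nothing to start or stop, which is what it means for the node to have converged on the desired state.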

When you deploy an application to a Kubernetes cluster, the application's pods are scheduled to run on one or more nodes. The Kubernetes scheduler decides which node to place a given pod on based on a variety of factors, including the resources available on each node and any constraints or rules that have been specified for the pod.

Overall, nodes play a key role in the Kubernetes architecture, as they are responsible for running the applications and services that are deployed to the cluster.

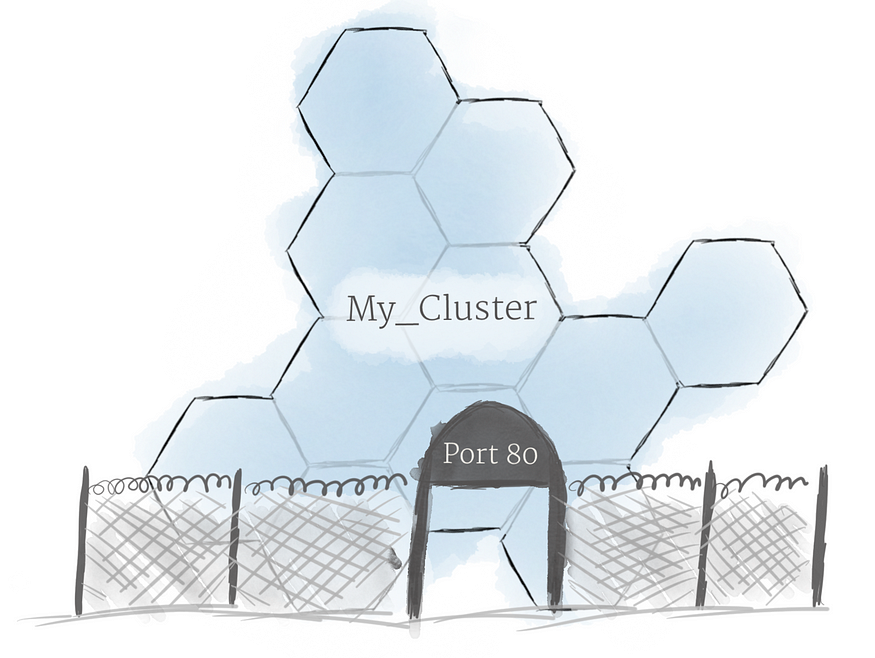

The Cluster

In Kubernetes, a cluster is a set of nodes that are managed by the Kubernetes control plane and used to run and host the containers of an application. A cluster can be composed of physical servers, virtual machines, or a combination of both, and it can span multiple availability zones or data centers.

Here's an example of how a Kubernetes cluster might be used:

Imagine you are a software developer working on a new web application. You have written the code and tested it locally on your computer, and now you want to deploy it to a production environment where it can be accessed by users.

To do this, you might set up a Kubernetes cluster consisting of a number of physical or virtual servers. You could create a container image of your application using a tool like Docker, and then use Kubernetes to deploy the container to the cluster.

Overall, a Kubernetes cluster provides a platform for deploying and managing applications at scale, allowing developers to focus on building and improving their applications rather than worrying about the underlying infrastructure.

Persistent Volumes

In Kubernetes, a persistent volume (PV) is a piece of storage in the cluster that is either provisioned by an administrator or provisioned dynamically through a storage class. Pods request it through a persistent volume claim (PVC). PVs provide long-term storage for applications, holding data that needs to persist beyond the lifetime of a single pod.

Here's an example of how a PV might be used in Kubernetes:

Imagine you are running a web application that stores user data in a database. You want to ensure that the data is persisted even if the pods running the application are restarted or moved to a different node.

To do this, you could create a PV to hold the database's data. The PV would be provisioned with a specific amount of storage, and the pods running the database would mount it through a persistent volume claim.

When the application writes data to the PV, it will be persisted on the underlying storage infrastructure, even if the pods are restarted or moved to a different node. This allows you to maintain the data in the event of pod failures or other disruptions.
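As a rough sketch of how this looks in practice, a pod usually reaches persistent storage through a persistent volume claim rather than by naming a PV directly. The example below builds a claim and a pod that mounts it, using plain Python dictionaries and printing them as JSON. The names (`db-data`, `database`), the image, the mount path, and the 10Gi size are assumptions for illustration only.

```python
import json

# Hypothetical claim name, chosen for illustration only.
CLAIM_NAME = "db-data"

# A claim requesting 10Gi of storage that one node at a time can mount read-write.
claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": CLAIM_NAME},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

# A pod that mounts the claimed storage at /data inside its container.
database_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "database"},
    "spec": {
        "containers": [
            {
                "name": "database",
                "image": "example.com/my-database:1.0",  # placeholder image
                "volumeMounts": [{"name": "data", "mountPath": "/data"}],
            }
        ],
        "volumes": [
            {"name": "data", "persistentVolumeClaim": {"claimName": CLAIM_NAME}}
        ],
    },
}

for manifest in (claim, database_pod):
    print(json.dumps(manifest, indent=2))
```

Kubernetes manifests are more often written in YAML, but kubectl also accepts JSON, so each printed object could be saved to its own file and applied with kubectl apply -f.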

Overall, PVs provide a way to store data in a persistent manner in a Kubernetes cluster, ensuring that data can be retained even if the pods that are using it are restarted or moved.

Software

Containers

Containers are a way to package and run applications in a lightweight, portable, and isolated environment. In Kubernetes, containers are used to run the applications and services that are deployed to a cluster.

Here's an example of how containers might be used in Kubernetes:

Imagine you are a software developer working on a new web application. You have written the code and tested it locally on your computer, and now you want to deploy it to a production environment where it can be accessed by users.

To do this, you might create a container image of your application using a tool like Docker. A container image is a lightweight, standalone, executable package that includes everything needed to run the application, including the code, libraries, dependencies, and runtime.

Once you have created the container image, you can use Kubernetes to deploy it to a cluster of servers or virtual machines. Kubernetes will create one or more containers from the image and run them on the cluster, allowing the application to be accessed by users.

Kubernetes can also help you manage the containers by performing tasks such as scaling the number of containers up or down to meet demand, rolling out new versions of the application, and handling failures or crashes of individual containers.

Overall, containers provide a way to package and run applications in a consistent and portable manner in a Kubernetes cluster, allowing you to deploy and manage your applications in a reliable and scalable way.

Pods

In Kubernetes, a pod is a logical host for one or more containers. Pods are the smallest deployable units in a Kubernetes cluster, and they are used to deploy and run applications and services.

Here's an example of how pods might be used in Kubernetes:

Imagine you are a software developer working on a new web application. You have written the code and tested it locally on your computer, and now you want to deploy it to a production environment where it can be accessed by users.

To do this, you might create a container image of your application using a tool like Docker, and then use Kubernetes to deploy the container to a cluster of servers or virtual machines.

Kubernetes will create one or more pods to host the containers of the application. Each pod consists of one or more containers that are co-located and share resources such as networking and storage.
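To make the idea of co-located containers sharing storage concrete, here is a minimal sketch of a pod with two containers that mount the same scratch volume. The manifest is built as a Python dictionary and printed as JSON. The pod name, container names, images, and paths are all hypothetical.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                # Main application container; writes its logs to the shared volume.
                "name": "web",
                "image": "example.com/web-app:1.0",  # placeholder image
                "ports": [{"containerPort": 8080}],
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/var/log/app"}
                ],
            },
            {
                # Helper container; reads the same files through a read-only mount.
                "name": "log-shipper",
                "image": "example.com/log-shipper:1.0",  # placeholder image
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/logs", "readOnly": True}
                ],
            },
        ],
        # An emptyDir volume lives as long as the pod and is visible to both containers.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
    },
}

print(json.dumps(pod, indent=2))
```

Because both containers belong to the same pod, they are always placed on the same node and share one network identity, so they can also talk to each other over localhost.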

Once the pods are created, Kubernetes can help you manage them by performing tasks such as scaling the number of pods up or down to meet demand, rolling out new versions of the application, and handling failures or crashes of individual pods.

Overall, pods provide a way to deploy and manage the containers that make up an application or service in a Kubernetes cluster, allowing you to run and scale your applications in a consistent and reliable manner.

Deployments

In Kubernetes, a deployment is a higher-level resource that manages a set of identical pod replicas created from a single pod template. Deployments are used to deploy and manage the applications and services that run in a Kubernetes cluster.

Here's an example of how a deployment might be used in Kubernetes:

Imagine you are a software developer working on a new web application. You have written the code and tested it locally on your computer, and now you want to deploy it to a production environment where it can be accessed by users.

To do this, you might create a container image of your application using a tool like Docker, and then use Kubernetes to deploy the container to a cluster of servers or virtual machines.

To deploy the application, you would create a deployment resource in Kubernetes. The deployment would specify the number of replicas of the application's pods that you want to run, as well as the pod template, which includes settings such as the container image to use and resource requests and limits. Network policies are defined as separate resources rather than as part of the deployment.

Kubernetes would then use the deployment to manage the replicas of the application's pods. It would work to keep the desired number of replicas running, and it would handle tasks such as rolling out new versions of the application, scaling the number of replicas up or down when you or an autoscaler change the replica count, and replacing pods that fail or crash.
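A deployment of this kind might look roughly like the following. This is a sketch under assumptions: the helper `make_deployment`, the application name, the image, and the resource limits are invented for illustration, while the field names follow the apps/v1 Deployment schema.

```python
import json


def make_deployment(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                "limits": {"cpu": "500m", "memory": "256Mi"}
                            },
                        }
                    ]
                },
            },
        },
    }


deployment = make_deployment("web", "example.com/web-app:1.0", replicas=3)
print(json.dumps(deployment, indent=2))
```

Changing the image in the template and applying the manifest again is what triggers a rolling update, while changing only the replica count scales the application without replacing existing pods.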

Overall, deployments provide a way to deploy and manage applications and services in a Kubernetes cluster, allowing you to run and scale your applications in a consistent and reliable manner.

Ingress

In Kubernetes, an ingress is a collection of rules that allow inbound HTTP and HTTPS connections to reach services in the cluster. An ingress controller, which acts as a reverse proxy, reads these rules and routes traffic from external sources to the appropriate service within the cluster.

Here's an example of how an ingress might be used in Kubernetes:

Imagine you have deployed a number of applications and services to a Kubernetes cluster, and you want to expose these services to external users over the Internet.

To do this, you could create an ingress resource in Kubernetes. The ingress would specify the rules for routing traffic to the appropriate service within the cluster. For example, you could route traffic for example.com to a service that serves a web application, and traffic for api.example.com to a service that serves an API.
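The host-based routing just described could be expressed along these lines. The sketch builds an Ingress manifest from a mapping of hostnames to service names and prints it as JSON. The hostnames come from the example above; the helper `make_ingress`, the ingress name, the service names `web` and `api`, and port 80 are assumptions.

```python
import json


def make_ingress(name, host_to_service, port=80):
    """Build a networking.k8s.io/v1 Ingress with one rule per hostname."""
    rules = []
    for host, service in host_to_service.items():
        rules.append({
            "host": host,
            "http": {
                "paths": [
                    {
                        # Send every path under this host to the named service.
                        "path": "/",
                        "pathType": "Prefix",
                        "backend": {
                            "service": {"name": service, "port": {"number": port}}
                        },
                    }
                ]
            },
        })
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {"rules": rules},
    }


ingress = make_ingress(
    "example-routes",
    {"example.com": "web", "api.example.com": "api"},
)
print(json.dumps(ingress, indent=2))
```

An Ingress resource only declares the rules. An ingress controller must be running in the cluster for any traffic to actually be routed according to them.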

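The ingress itself does not implement the routing. It can name an ingress class, which selects the ingress controller that handles it. Depending on the controller and the environment, the controller may run as a proxy inside the cluster, for example behind a Network Load Balancer (NLB), or it may provision a cloud load balancer such as an Application Load Balancer (ALB) and configure it with the appropriate rules for routing traffic.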

Once the ingress is created, external users can access the services within the cluster by sending requests to the load balancer. The load balancer will route the requests to the appropriate service based on the rules specified in the ingress.

Overall, ingresses provide a way to expose the services within a Kubernetes cluster to external users, allowing you to easily access and manage your applications and services from outside the cluster.

Resources